Sunday, December 26, 2010

Human morphology-changing technologies

To date, most technology has been human-created. It can be grouped into two categories: technologies that are not likely to have an immediate, direct impact on human morphology, and those that might.

Technologies that would likely not change human morphology

Technologies far more dramatic than any experienced to date could arrive rapidly. While these new technologies could change some aspects of life, human biological drives could remain unchanged, and therefore the structure and dynamics of human societal organization, interaction, and goal pursuit could also remain unchanged. Examples of such advances could include the realization of molecular nanotechnology, quantum computing, cold fusion, and immortality. Even with several of these revolutionary technologies implemented, the seemingly different world would not actually be structurally different if humanity were still ordered around the same familiar, biologically driven goals.

Technologies that might change human morphology

The other group comprises technologies that could have a near-term impact on the structure and form of what it means to be human, for example, cognitive augmentation, genomic therapies, and synthetic biology. The area with the greatest potential for change is improving human mental capability. Several significant advances in neurology-related fields in the last few years could, if fully realized, alter human morphology. Even the resolution of all mental pathologies, such as Parkinson’s disease, depression, stroke, and addiction, would constitute morphological change at a basic level. Augmenting cognition and deliberately managing biophysical states would constitute morphological change at other levels.

Posted by LaBlogga at 8:20 AM

Labels: biophysical state management, cognition, enhancement, genomic therapies, human mental capability, intelligence, morphology, synthetic biology, technology, tools

Sunday, December 19, 2010

Morphological reach of technology

Is technology simply the set of tools that humanity has created to further its will, or is it a force that can change humanity? Whether arrowheads or supercomputers, humans have made technology to enable and reinforce human nature and evolutionary tendencies. However, it is possible that advanced technology could actually change humans, human nature, and biological drives, both unintentionally and by design.

Posted by LaBlogga at 9:50 AM

Labels: intelligence, morphology, technology, tools

Sunday, December 12, 2010

Supercomputers surpass 2.5 petaflops

The biannual list of the world's fastest supercomputers was released on November 13, 2010. For the first time, a supercomputer surpassed 2.5 petaflops: the new world's fastest, Tianhe-1A (NUDT TH MPP, X5670 2.93 GHz 6C, NVIDIA GPU, FT-1000 8C, built by NUDT), at the National Supercomputing Center in Tianjin, China.

Figure 1 illustrates how supercomputing power has grown over the last five years, starting at (a paltry) 136.8 teraflops in June 2005 and experiencing four solid doublings. This rate of progress is estimated to continue and usher in the exaflop era of supercomputing by mid-decade. The IBM Roadrunner at Los Alamos was the first to exceed one petaflop, in June 2008, and held the top spot for three consecutive lists; it was then surpassed by the Cray Jaguar at Oak Ridge for two lists. China has now captured the top ranking with Tianhe-1A, its NUDT MPP system.
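
The doubling arithmetic can be checked with a few lines of Python. This is a sketch under stated assumptions: it takes 2.5 petaflops as a conservative floor for the November 2010 figure ("over 2.5 petaflops"), uses the June 2005 figure of 136.8 teraflops, and treats the span between the two lists as five years and five months of steady exponential growth.

```python
import math


def doublings(start_flops: float, end_flops: float) -> float:
    """Number of times capacity doubled between two measurements."""
    return math.log2(end_flops / start_flops)


def doubling_time_years(start_flops: float, end_flops: float, span_years: float) -> float:
    """Average time per doubling, assuming steady exponential growth over the span."""
    return span_years / doublings(start_flops, end_flops)


JUNE_2005_FLOPS = 136.8e12  # 136.8 teraflops, fastest system in June 2005
NOV_2010_FLOPS = 2.5e15     # assumption: 2.5 petaflops as a floor for "over 2.5 petaflops"
SPAN_YEARS = 5 + 5 / 12     # June 2005 to November 2010

n = doublings(JUNE_2005_FLOPS, NOV_2010_FLOPS)
t = doubling_time_years(JUNE_2005_FLOPS, NOV_2010_FLOPS, SPAN_YEARS)
print(f"{n:.2f} doublings, roughly one every {t:.2f} years")
```

With these inputs the script reports a little over four doublings, roughly one every 1.3 years, in line with the four doublings described in the post.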

The world's supercomputers are working on many challenging problems in areas such as physics, energy, and climate modeling. A natural question is how soon human neural simulation might be conducted with supercomputers. It is a challenging problem because neural activity has a different architecture from supercomputing activity. Signal transmission is different in biological systems, with a variety of parameters such as context and continuum determining the quality and quantity of signals. Distributed computing systems might be better suited to processing problems in a fashion similar to that of the brain. The largest current distributed computing project, Stanford's Folding@home, reached 5 petaflops of computing capacity in early 2009, just as supercomputers were reaching 1 petaflop. The network continues to focus on modeling protein folding but could eventually be extended to other problem spaces.

Posted by LaBlogga at 1:52 PM

Labels: alternative intelligence, chip architecture, cognition, computer networks, distributed processed, petaflop, protein folding at home, signal transmission, supercomputing

Sunday, December 05, 2010

Bay area aging meeting summary

In the second Bay Area Aging Meeting, held at Stanford on December 4, 2010, research was presented regarding attempts to further elucidate and characterize the processes of aging, primarily in model organisms such as yeast, C. elegans (worms), and mice. A detailed summary of the sessions is available here. The work spanned some repeating themes in aging research:

Theme: processes work in younger organisms but not in older organisms

A common theme in aging is that processes function well in the first half of an organism’s life, then break down in the second half, particularly in the last 20% of the lifespan. In one example, visualizations and animations were created from 3D tissue sectioning of the intestine of young (4 days old) and old (20 days old) C. elegans. In the younger worms, nuclei and cells were homogeneous and regularly spaced along the intestine running down the length of the worm. In older worms, nuclei disappeared (an initial 30 sometimes dropped to 10), and the intestine became twisted and alternately shrunken and convoluted due to DNA accumulation and bacterial build-up.

Theme: metabolism and oxidation critically influence aging processes

Two interesting talks concerned UCP2 (mitochondrial uncoupling protein 2), a mitochondrial inner-membrane protein that reduces the rate of ATP synthesis and regulates bioenergy balance. UCP2 and UCP3 have an important but not yet fully understood role in regulating ROS (reactive oxygen species) and overall metabolic function, possibly by allowing protons to re-enter the mitochondrial matrix without passing through oxidative phosphorylation. The mechanism was explored in results showing that worm lifespan was extended by inserting the zebrafish UCP2 gene (not natively present in the worm).

Theme: immune system becomes compromised in older organisms

Two talks addressed the issue of immune system compromise. One team created a predictive analysis that could be used to assess an individual’s immune profile and potential response to vaccines by evaluating demographics, chronic infection status, gene expression data, cytokine levels, and cell subset function. Other work looked into the specific mechanisms that may degrade immune systems in older organisms. Levels of SIRT1 (an enzyme related to cell regulation) decline with age. This leads to unstable acetylation of the transcription factor FoxP3 (a gene involved in immune system response), which suppresses the immune system by reducing regulatory T cell (Treg) differentiation in response to pathogens.

Theme: systems-level understanding of aging processes

Many aging processes are systemic in nature, with complex branching pathways and unclear causality. Research was presented in two areas: p53 pathway initiation and amyloid beta plaque generation. p53 is a critical tumor suppressor protein that controls many processes related to aging and cell maintenance, including cell division, apoptosis, and senescence, and it is estimated to be mutated in about 50% of cancers. Research suggested that more clues for understanding the multifactorial p53 pathway could come from SnoN, which may be an alternative mechanism for activating p53 as part of the cellular stress response. Neurodegenerative pathologies such as Alzheimer’s disease remain unsolved problems in aging. For example, it is not known whether the amyloid beta plaques that arise are causal, or a protective response to other causal agents. Some research looked at where amyloid beta is produced in cells, finding that after the amyloid precursor protein (APP) leaves the endosome, both the Golgi and a related recycling complex may be involved in the generation of amyloid beta.

Theme: lack of conservation progressing up the model organism chain

Aging and other biological processes become more complicated with progression up the chain of model organisms. What works in yeast and worms may not work in mice, and what works in mice and rats may not work in humans. Some interesting research looked at ribosomal proteins, whose deletion is known to extend lifespan in model organisms. The first key point was that there was fairly little (perhaps less than 20%) overlap in lifespan-extending ribosomal protein deletions conserved between yeast and worms. Second, an examination of some of the shared deletions in mice (especially RPL19, 22, and 29) found some conservation (e.g., RPL29), and also underlined the systemic nature of biology, finding that other homologous genes (e.g., RPL22L (“-like”)) may compensate for the deletion and thereby not extend lifespan.

Theme: trade-offs are a key dynamic of aging processes

The idea of trade-offs is another common theme in aging: trade-offs between processes, resource consumption, and selection. One example was research showing that deleting DGAT1, a single gene involved in lipid synthesis, is beneficial and promotes longevity in mice when calories are abundant, but that the gene is crucial for survival under calorie restriction. This suggests a possible role for directed methylation to turn genes on and off in different situations. More details were presented in a second area of trade-offs: reproduction versus lifespan. Reproduction is known to be costly, and organisms without reproductive mechanisms may have extended lifespans. Research examined the specific pathways, finding that Wnt and steroid hormone signaling in germline and somatic reproductive tissues influenced worm longevity, particularly through non-canonical (i.e., not the usual) pathways involving the signaling components MOM-2/Wnt and WRM-1/beta-catenin.

Conclusion

Academic aging research is continually making progress in the painstaking characterization of specific biological phenomena in model organisms; however, the question naturally arises as to when and how the findings may be applied in humans to improve lifespan and healthspan. In fact, there is a fair degree of activity in applied human aging research. Just as more individuals are starting to include genomic medicine, preventive medicine, and baseline wellness marker measurement in health self-management, so too are they consulting with longevity doctors. One challenge is that at present it is incumbent on individuals to research doctors and treatments independently. Hopefully in the future there could be a standard list of the anti-aging therapies that longevity doctors would typically offer. Meanwhile, one significant way for individuals to start taking action is self-tracking: measuring a variety of biomarkers, for example, blood tests annually and exercise, weight, nutritional intake, supplements, and sleep more frequently.

Posted by LaBlogga at 1:05 PM

Labels: aging, amyloid beta plaque, bioenergy, conference, DGAT1, FoxP3, immune system, neurodegenerative, ouroboros, p53, ribosomal protein deletions, SIRT1, UCP, Wnt, zebrafish

Sunday, November 28, 2010

Multiworld ethics and infinitarian paralysis

In an interesting 2008 essay (The Infinitarian Challenge to Aggregative Ethics), Oxford futurist scholar Nick Bostrom considers some of the issues that may arise in multiworld ethics. The central focus is the theme of infinitarian paralysis: that individuals may not act because they think their impact is too small to matter. This is not a new theme; a classic example is declining to vote on the grounds that one voice does not matter. However, in an infinite multiworld where every permutation of every individual and their actions exists elsewhere, perhaps individual voices really do not matter, and individual agents in any world could experience infinitarian paralysis.
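
The arithmetic behind the paralysis can be sketched briefly. Assume, as the aggregative view does, that the value W of a world is the sum of the values v_i at each of infinitely many locations, and that this sum diverges to infinity; any finite change Δ produced by one agent's action then leaves the total exactly where it was:

```latex
W = \sum_{i=1}^{\infty} v_i = +\infty
\quad\Longrightarrow\quad
W + \Delta = +\infty = W \quad \text{for every finite } \Delta
```

If positive and negative values are both unbounded, the sum may not be defined at all, which leaves an agent trying to rank actions no better off.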

As the essay suggests, humans may be able to qualify and circumscribe the issue of infinitarian paralysis and live and act unconcernedly in the current world. While this may be possible now, as humans become more rational through augmentation, and with the potential advent of artificial intelligence and hybrid beings, the specter of infinitarian paralysis may be harder to ignore. The inherent irrationality of humans together with the skill of ubiquitous rationalization is part of the cohesion of modern society. However, in a post-scarcity economy for material goods where the more immediate exigencies of living in the current world have evaporated, and biologically-derived utility functions have been re-designed, philosophical inconsistencies could well occupy a higher level of concern for thought-driven beings.

Posted by LaBlogga at 12:56 PM

Labels: aggregative ethics, bostrom, infinitarian challenge, infinitarian paralysis, local actions, meaning, ubiquitous rationalization, unitary thinking

Sunday, November 21, 2010

Evolutionary adaptations and artificial intelligence

Art and religion are human evolutionary adaptations. Are there similar evolutionary adaptations that human-level and beyond artificial intelligence would be likely to make? Another way to ask this is: were art and religion predictable? It seems that they were, perhaps not in their detailed outcomes, but in the sense that mechanisms would arise to allow for the achievement of human objectives such as status-garnering and mate selection.

Likewise, it seems quite possible that human-level and beyond artificial intelligence would make evolutionary adaptations. Utility functions could be edited in many ways. The primary area could be performance optimization: continuously improving cognition and other operations. A second area could relate to societal objectives, to the extent that artificial intelligence is present in communities. Artificial intelligence might not have art and religion, but could have related mechanisms for achieving external and internal purposes.

Posted by LaBlogga at 12:14 PM

Labels: adaptation, art, artificial intelligence, evolution, future of intelligence, intelligence, status, utility function

Sunday, November 14, 2010

Cognitive enhancement through longer Schwann cells

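Do faster thinkers have longer Schwann cells? Evolution has optimized human brain signal transmission within the existing machinery. Schwann cells are glial cells that wrap axons in insulating myelin sheaths (in the brain itself, the corresponding myelinating cells are oligodendrocytes). The Nodes of Ranvier are the unwrapped gaps that occur at regular intervals along the axon between adjacent myelin sheaths. Because the signal jumps from node to node rather than traveling continuously along the axon, the Nodes of Ranvier make signal transmission faster and conserve energy.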

While the length of Schwann cells may have been sufficient for the selection pressures of evolution, it may not be optimized for modern thought. In many cases, modern thought involves more reasoning, imagining, conceptualizing, and contemplation than fight-or-flight responses. Different brain patterns arise from the different kinds of thought. One path to cognitive enhancement could be lengthening Schwann cells, and thus the spacing between nodes, for faster transmission and possibly faster cognition.

Posted by LaBlogga at 5:05 PM

Labels: axon, brain, cognition, cognitive enhancement, Human body 2.0, human redesign, intelligence, Schwann cells, thought

Sunday, November 07, 2010

Human body 2.0

The human body is a complex, intricate composite of millions of years of evolution, but even the most cursory review immediately suggests the potential benefits of redesign. Even without considering the deeper possibilities and eventual exigencies of augmentation, it might be possible to replicate the full existing functionality of a human in a much smaller, energy-conscious form factor, perhaps 1/10 the current size.

Waste: materials generation and energy expenditure

The biggest theme is waste due to untargeted processes. The main forms of waste are the excess materials generated and the energy used to move them around, both of which require a big overall system. Unlike the latest drugs, most natural molecules are untargeted; they travel around the body until they bump into a place to bind or are expelled unused. The waste is not confined to the bloodstream and circulatory system: steroid hormones, for example, diffuse into all cells but bind in only a small number of them.

Optimizing systems without sacrificing functionality

It could be argued that redundancy has benefits and that waste is more acceptable in some systems than in others, for example, the value of having an extensive immune system on patrol 24/7 even though the turnover of unused cells is high. However, optimization could likely improve all system parameters.

Redesign phases: improved targeting and receptor enhancement

A potential human body redesign would likely occur in phases, revising a few lower-impact operations first, for example, targeting certain small classes of proteins to existing receptors. A second phase could include generating new receptors or enhancing the specificity of existing ones for finer-resolution targeting.

Eventually, with robust targeting, it might be possible for the body to pump around 90% less ‘stuff.’ This would imply tremendous energy savings, and in turn decreased requirements for fluid, nourishment, vitamin, and mineral intake, and could even slow aging as processes would not wear out as fast.

Form factor hybrids

A smaller form factor may be undesirable for many reasons, but presumably it would be possible for streamlined wetware systems to merge into different kinds of hybrid form factors with ultralight ultrastrong hardware.

Posted by LaBlogga at 4:43 PM

Labels: ephemeral form factor, form factor, functionality fungibility, future of intelligence, green humans, Human body 2.0, human substrate, purpose-based design, redesigning humans

Sunday, October 31, 2010

Synbio in space

Many interesting applications of synthetic biology in space missions were discussed at the Synthetic Biology workshop held October 30-31, 2010 at NASA Ames in conjunction with the National Academies Keck Futures Initiative. Scientists from a variety of backgrounds came together to brainstorm solutions in an integrative approach. The most impressive aspect was how far different areas of synthetic biology have progressed: far enough to discuss, in a robust way, ideas and techniques that could be applied to space missions.

Environment enhancement

One of the most important areas where synthetic biology may be able to help is in making space environments more manageable and habitable for humans. The regolith, the powdery blanket covering the Moon and Mars, may need to be converted into harder, less dusty surfaces.

Biomining

Synthetic biology could help create microbes that weather regolith and rock faster, releasing bioessential elements such as magnesium, calcium, potassium, and iron an order of magnitude more quickly. (related publications)

Biomaterials and self-building habitats

Synthetic biology could help to create microbes for use in building structures, both as scaffolds and by growing on scaffolds. Bacterially-generated alternatives to Portland cement (bricks made from bacteria, sand, calcium chloride, and urea) are currently being investigated, along with other plant-development inspired architectures.

New gene function

While the discovery of new mammalian genes has become saturated, the majority of newly sequenced ocean-based microbes continue to have novel gene functions. Some of these may be quite useful in space environments, for example, D. radiodurans, which can withstand significant radiation and rebuild its DNA when damaged.

Space economics – the Basalt Economy

The economics of space suggest that synthetic biological solutions might be developed more readily for space challenges, and later deployed on Earth as the technologies mature. The main constraint for space is developing in-situ solutions that are cheaper than lifting materials from Earth, as opposed to creating products that can compete in Earth-based supply chains (e.g., synthetic biofuel).

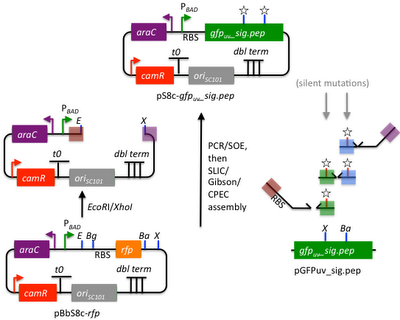

Tools

Whole human genome and metabiome sequencing, genome synthesis and assembly, and genetic design and proofing software (bioCAD) as shown in Figure 1 are all improving. A vast industry similar to that of semiconductor design and manufacture could likely develop for synthetic biology.

Posted by LaBlogga at 4:23 PM

Labels: basalt economy, bioCAD, biomining, Genomics, Keck, NASA, regolith, sequencing, space habitation, synbio, synthetic biology

Sunday, October 24, 2010

Future of fashion

A new idea, spray-painted clothing, joins digital textile printing and 3-D printed clothing as a possible tool for creating the future of fashion.

Spanish fashion designer Manel Torres, working together with scientists from University College London, has developed spray-on fabric (Figure 1). Short fibers of wool, linen, or acrylic are mixed with a polymer solution that binds them together as soon as they are sprayed onto a person or mannequin.

Clothing made from spray-on fabric was presented at the Science in Style fashion show, September 21, 2010 in London (Figure 2).

There are many speculations about the wide range of potential applications for spray-on fabric. Some of the obvious ideas for on-demand fabric are sterile bandages and military and crisis-relief use. Everyday uses are interesting too. For example, to the extent the material is recyclable and cost-effective, being able to spray on additional layers when feeling cold, and easily remove and discard them when hot, could be quite convenient. Rain gear could be similarly donned and discarded. The long-expected futurist’s dream of on-demand 3D clothing printing booths could be closer to being realized.

Posted by LaBlogga at 11:29 AM

Labels: 3-D printing, clothing, fashion, garment, micro-manufacturing, on-demand material goods, personalization, personalized manufacturing, post-scarcity economy, spray-on fabric

Sunday, October 17, 2010

Phase transition in intelligence

There could be at least three approaches to the long-term future of intelligence: engineering life into technology, simulated intelligence, and artificial intelligence. Further, while the story of evolutionary history is the domination of one form of intelligence, the future could hold ecosystems with multiple kinds of intelligence, particularly specialized by purpose or task.

There are significant technical hurdles in executing simulated intelligence and artificial intelligence, but the areas have been progressing in Moore’s Law fashion. The engineering of life into technology will need to proceed expediently to keep pace with technological advance, and tie a lot of wetware loose ends together.

At present, the mutation rate of genetic replication puts an upper bound on how complex biological organisms can be. Humans, for example, cannot be more than about 10x as complex as Drosophila (the fruit fly). However, if the error rate of the genetic replication machinery could be reduced, it might be possible to have organisms 10x more complex than humans, and so on…
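
One standard way to formalize such a bound, offered here as an assumption rather than as the post's own model, is Manfred Eigen's error threshold: selection can maintain a replicating sequence only if its length L stays below roughly ln(σ)/μ, where μ is the per-site copying error rate and σ is the selective advantage of the best-adapted sequence over its mutants. The sketch below uses made-up illustrative values for μ and σ; it shows only that the maximum maintainable length scales inversely with the error rate, so a tenfold improvement in fidelity allows roughly tenfold more sequence.

```python
import math


def max_genome_length(per_site_error_rate: float, selective_advantage: float) -> float:
    """Approximate Eigen error threshold, L_max ~ ln(sigma) / mu.

    per_site_error_rate (mu): copying-error probability per site per replication;
        the approximation assumes mu is small.
    selective_advantage (sigma): fitness of the best-adapted sequence relative
        to its mutants; must exceed 1.
    """
    if not 0.0 < per_site_error_rate < 1.0:
        raise ValueError("per-site error rate must be between 0 and 1")
    if selective_advantage <= 1.0:
        raise ValueError("selective advantage must be greater than 1")
    return math.log(selective_advantage) / per_site_error_rate


# Hypothetical illustrative values, not measurements of any real organism.
SIGMA = 10.0
baseline = max_genome_length(1e-8, SIGMA)  # current replication fidelity
improved = max_genome_length(1e-9, SIGMA)  # tenfold fewer copying errors
print(f"maintainable length grows by a factor of {improved / baseline:.1f}")
```

Information content is only a rough proxy for organismal complexity, so the sketch illustrates the scaling argument rather than measuring complexity itself.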

Posted by LaBlogga at 8:27 PM

Labels: artificial intelligence, complexity, future of intelligence, genetic replication, information optimization, intelligence, mutation rate, phase transition, simulated intelligence

Sunday, October 10, 2010

Consumer genomic testing update

In the wake of expected industry-wide regulation of consumer genomic testing, two of the big four testing companies, Navigenics and Pathway Genomics, have pulled their direct-to-consumer offerings in the last few months. Now a doctor must order their tests.

23andMe and deCODEme still have consumer genomic tests available, covering 174 conditions for $429 and 49 conditions for $2,000, respectively (Figure 1). Sooner rather than later could be a good time to sign up for a genomic service, possibly using year-end HSA dollars.

The potential industry-wide regulation is in regard to two issues, one is whether a physician must order the tests, and two, whether companies should be able to publish their interpretations of the results.

The DIYgenomics website lists two online petitions in support of rights to one's own genetic data:

Posted by LaBlogga at 11:25 AM

Labels: 23andme, activism, applied genomics, consumer genomics, data rights, deCODEme, navigenics, pathway genomics, personal genomics, petition

Sunday, October 03, 2010

Hardware apps (smartphone peripherals)

Apps are not just software anymore! The interesting new field of hardware apps, or smartphone peripherals, is under development.

One example is the iPhly iPhone-based radio controller. Radar detectors and dashboard car-surveillance cams could follow. Earthquake sensors are another obvious application, as accelerometer chips for sensing earthquakes have already been used in laptops.

Miniaturized modules could snap onto smartphones for many different applications. Scientific instruments could be an interesting application area, giving any individual the opportunity to have a mobile lab. Ultrasound and portable microscopes have already been demonstrated (iPhone attachment, cell phone attachment).

Portable personal sensing modules for biodefense (iPhone biodefense app spec) and health optimization could be a killer app. Microneedle arrays could continuously or periodically perform a sampling of hundreds of blood-based data points like Orsense does for continuous glucose monitoring. Mass spectrometer attachments could identify any substance chemically. Miniaturized genome sequencers and RNA sequencers could identify the underlying DNA and expression profiles of samples.

Posted by LaBlogga at 11:48 AM

Labels: apps, biodefense, biomonitor, biosensing, hardware apps, peripherals, smartphone

Sunday, September 26, 2010

Future Art

Art interprets, defines, and responds to the social and intellectual milieu of any era.

The Impressionists reacted to the precise rendering of their day, prompted in part by the new technology of photography, by making things blurrier. Modern art reacted to everything having been made visible by moving into the conceptual and abstract. What iconic reaction could drive the contemporary art of today into a radical new era?

Computers, data flows, communications, machines, computation, and software are the hallmarks of today’s culture. Their ubiquity and influence invite rejection and commentary, and the anticipated response has been portrayed in dystopian machine art.

Beyond the obvious rejection of the machine era, computers, software, and data provide a vibrant new medium for art. For example, data visualization could be seen as art modulated with information.

Sentiment engines have become the art and barometer of worldwide emotion, for example We Feel Fine (blogs) and Pulse of a Nation (Twitter).

The time lapse photography concept used for flight pattern visualization (US, worldwide) could be extended to other areas.

Software code base data visualization

One form of contemporary art could be the sped-up visualization of code commits to the software repositories of large-scale projects. The code might look like living organisms, possibly interesting new species. The fast flash of DOS is the prokaryote to the Windows 7 and Mac OS X eukaryotes. The iPhone could be the raptor to Android’s human.

Posted by LaBlogga at 11:37 AM

Labels: art, code base, commentary, creativity, data visualization, expression, information modulation, information visualization, interpretation, reaction, software

Sunday, September 19, 2010

Real-time economy feeds and ambient economics

Social economic networks are just one part of the broader context of the transformational economics that may eventually lead to a post-scarcity economy for material goods. In addition to monetizing alternative currencies and unlocking previously inaccessible value, social economic networks are also generating a critical meta asset: billions of data points which can be aggregated into real-time economy feeds.
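To make the idea of a real-time economy feed more concrete, here is a minimal sketch of one way raw transaction data points could be rolled up into a live feed: a sliding-window count of purchase events per category. The `RealTimeFeed` class, the category labels, and the window length are hypothetical choices for illustration, and the sketch assumes events arrive in timestamp order.

```python
from collections import Counter, deque


class RealTimeFeed:
    """Rolling count of purchase events per category over the last `window` seconds.

    Hypothetical illustration; assumes events are added in timestamp order.
    """

    def __init__(self, window: float):
        self.window = window
        self.events = deque()    # (timestamp, category) pairs, oldest first
        self.counts = Counter()  # live per-category totals inside the window

    def add(self, timestamp: float, category: str) -> None:
        self.events.append((timestamp, category))
        self.counts[category] += 1
        self._expire(timestamp)

    def _expire(self, now: float) -> None:
        # Drop events that have fallen out of the window and update the totals.
        while self.events and self.events[0][0] <= now - self.window:
            _, cat = self.events.popleft()
            self.counts[cat] -= 1
            if self.counts[cat] == 0:
                del self.counts[cat]

    def snapshot(self, now: float) -> dict:
        """Return the per-category counts for the window ending at `now`."""
        self._expire(now)
        return dict(self.counts)
```

A real feed could aggregate many more dimensions (price, geography, sentiment), but the same expire-and-count pattern would apply.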

Posted by LaBlogga at 5:54 PM

Labels: aggregation, ambient economics, asset creation, economy 2.0, prediction, real-time economy feed, social business intelligence, social economic networks, transformational economics

Sunday, September 12, 2010

Personal genome: data analysis challenge

Five themes emerged from the material presented at the 3rd annual personal genomes meeting at Cold Spring Harbor Laboratory held September 10-12, 2010.

Posted by LaBlogga at 12:23 PM

Labels: cancer, cancer genomics, conference, DNA, genome, personal genome, sequencing, structural variation

Sunday, September 05, 2010

Crowdsourcing: labor-as-a-service marketplaces

The dynamism of the information economy, the internet, and the recession have forced and allowed the flowering of labor-as-a-service marketplaces. Individuals can self-direct their professional activity with greater empowerment, and service-consumers can be more selective and focused in their purchase of labor services through crowdsourcing. More productive use of time can occur for both service-providers and service-consumers.

Work is becoming quantized.

Posted by LaBlogga at 8:35 AM

Labels: crowdconf2010, crowdsourcing, economics, economy 2.0, freelance camp, labor, labor as a service, labor marketplace, resource fungibility, service economy, work

Sunday, August 29, 2010

Anticipative demand and consumer intent prediction

Social economic networks improve the value proposition for both individual and institutional consumers since products and services can be discovered and targeted with greater relevancy.

Rich attribute information posted publicly by social media users (individual and institutional) can be used by marketers and other interested parties (for example, potential employees) to infer the values, preferences, and interests of others. In response, hyper-personalized advertisements may be presented (Reference: Shih, The Facebook Era, 2009).

Hyper-targeted-marketing, recommendations, and authentic product endorsements from friends are some of the ways that social economic networks have improved commerce relevancy.

The next obvious step would be for vendors to predict demand before it occurs, responding to customer intention. Intention prediction could be accomplished by merging aggregate Facebook (or other social media) ‘likes’ and comments from high-influence users into an estimate of purchase intent well before sales transactions occur. An anticipative demand market could arise.
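One way the intention-prediction step could be sketched: keep only signals from users above an influence threshold, weight each action type, and sum per product into a purchase-intent score. The `Signal` schema, the influence values, the `KIND_WEIGHT` table, and the 0.5 threshold are all hypothetical assumptions for illustration, not Facebook's or any vendor's actual method.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Signal:
    """One public social-media action about a product (hypothetical schema)."""
    user: str
    product: str
    kind: str         # "like" or "comment"
    influence: float  # assumed per-user influence weight in [0, 1]


# Assumed relative strength of each action type as a signal of intent.
KIND_WEIGHT = {"like": 1.0, "comment": 2.0}


def intent_scores(signals, min_influence=0.5):
    """Sum influence-weighted signals per product, keeping only high-influence users."""
    scores = defaultdict(float)
    for s in signals:
        if s.influence >= min_influence:
            scores[s.product] += KIND_WEIGHT.get(s.kind, 0.0) * s.influence
    return dict(scores)
```

Ranking products by these scores ahead of launch would be a crude version of the anticipative demand signal described above; the weights would have to be fitted against later sales to mean anything.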

Traditional economics equations could be further transformed as vendors test large varieties of targeted offerings in cost-effective ways via the internet.

The long tail of supply and demand could meet in millions of micro-markets, possibly even at the level of individual pricing and individual offerings. Smaller lot sizes could mean higher margins. There are numerous entrepreneurial opportunities in facilitating product and service generation, production, and distribution at the level of n=1.

Posted by LaBlogga at 9:35 AM

Labels: anticipative demand, asset creation, intent, intent prediction, intention, prediction markets, relevancy, social business intelligence, social currency, social economic networks

Sunday, August 22, 2010

Social currency unlocks value for individuals and organizations

Social currency, shared information which encourages further social encounters, is transacted through social economic networks.

From an economic perspective, the role of social economic networks is to unlock and monetize hidden value. This value is mainly in the form of information asymmetry as buyers and sellers hold information that is useful to each other. Both buyer and seller realize greater utility than if the social economic network did not exist.

Social economic networks are impacting both individuals and organizations at the global and local level. Transactions may be between anonymous parties or parties who know each other. For example, in the Groupon / Peixe Urbano group-purchasing model, individuals pre-commit to a purchase to obtain a discount if a minimum number of people participate.
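The tipping-point logic of the group-purchasing model can be sketched in a few lines. This is a simplified illustration, not Groupon's or Peixe Urbano's actual implementation; the `GroupDeal` class and its fields are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class GroupDeal:
    """A group-purchase offer that only activates once enough buyers pre-commit."""
    discount_price: float
    min_buyers: int
    commitments: set = field(default_factory=set)

    def commit(self, buyer: str) -> None:
        # A set means repeat commitments from the same buyer are counted once.
        self.commitments.add(buyer)

    @property
    def tipped(self) -> bool:
        # The deal "tips" when the minimum number of distinct buyers is reached.
        return len(self.commitments) >= self.min_buyers

    def settle(self) -> dict:
        """At deal close, charge each committed buyer the discount price if the
        deal tipped; otherwise nobody is charged."""
        if not self.tipped:
            return {}
        return {buyer: self.discount_price for buyer in self.commitments}
```

For example, with `min_buyers=3`, two distinct commitments leave the deal untipped and `settle()` charges no one; a third distinct buyer tips it, and all three pay the discount price.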

With social economic networks, organizations can unlock intellectual capital (social business intelligence) in ways that were not possible before with tools such as prediction markets (a mechanism for collecting and aggregating opinion using market principles). The concepts are attractive on a global level and can be implemented on a local level with the democratizing power of the internet.
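As an illustration of "aggregating opinion using market principles", here is a sketch of the logarithmic market scoring rule (LMSR), a well-known automated market maker for prediction markets proposed by Robin Hanson. The post does not say which mechanism any particular market uses, so LMSR is a swapped-in example; the liquidity parameter `b` and the two-outcome setup are assumptions. Prices always sum to 1 and can be read as the market's aggregated probability for each outcome.

```python
import math


def lmsr_cost(quantities, b):
    """LMSR cost function C(q) = b * ln(sum_i exp(q_i / b))."""
    m = max(q / b for q in quantities)  # subtract the max for numerical stability
    return b * (m + math.log(sum(math.exp(q / b - m) for q in quantities)))


def lmsr_prices(quantities, b):
    """Instantaneous prices p_i = exp(q_i/b) / sum_j exp(q_j/b); they sum to 1."""
    m = max(q / b for q in quantities)
    weights = [math.exp(q / b - m) for q in quantities]
    total = sum(weights)
    return [w / total for w in weights]


def buy(quantities, outcome, shares, b):
    """Return (cost, new_quantities) for buying `shares` of `outcome`.

    The trader pays the change in the cost function; the input list is not modified.
    """
    new_q = list(quantities)
    new_q[outcome] += shares
    return lmsr_cost(new_q, b) - lmsr_cost(quantities, b), new_q
```

Buying shares in an outcome raises its price, so each purchase moves the market's consensus probability; a larger `b` makes prices move less per share traded.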

Social economic networks are likely to grow in the next several years, particularly in the types of assets created and in the venues and models for their exchange.

Posted by LaBlogga at 4:42 AM

Labels: asset creation, prediction markets, social business intelligence, social currency, social economic networks

Sunday, August 15, 2010

Social economic networks and the new intangibles

Social economic networks are helping to monetize the new intangibles that arise from alternative currencies such as intention, attention, time, ideas, creativity, and health data. Individuals are starting to realize that labor is not their only asset with economic value; they hold multiple currencies that are starting to become monetizable.

The new currencies have new measurement metrics for monetization such as awareness, influence, authenticity, reach, action, engagement, impact, spread, connectedness, velocity, participation, shared values, and presence. As market principles become the norm for intangible resource allocation and exchange, all market agents are starting to have a more intuitive and pervasive concept of exchange and reciprocity.

Reputation has always been an important intangible asset, and was one of the first alternative currencies cited; however, it was not really monetizable other than as an attribute of labor capital. Now, there are more alternative currencies, such as social currency, that are directly monetizable through social economic networks.

Real value and real assets are being created in social economic networks. For example, products and services have higher value when they are recommended. An information asset that has been generated, largely through crowd-sourced labor, is product and service recommendations. Some examples of these information resources that facilitate shopping include Amazon reviews, Yelp local business recommendations, social shopping sites (e.g.; Kaboodle, Polyvore, StyleHive, ThisNext), and product attribute discovery and dialogue sites (e.g.; Stickybits, ClickZ).

Posted by LaBlogga at 7:57 PM

Labels: alternative currencies, asset, economy 3.0, intangibles, social currency, social economic networks, values

Sunday, August 08, 2010

Long-tail economics extended to physical objects

Chris Anderson, editor of WIRED magazine, gave an excellent talk on August 5, 2010 at the PARC Forum. He explained how the long-tail economic models which have driven digital content (allowing consumers to access books, music, and movies in the 80% of the market that is not blockbusters) are now starting to appear in the world of physical goods.

The process of realizing long-tail economics in any sector is that of going one-to-many; democratizing the tools of creation, then the tools of production, and finally the tools of distribution. This is what happened with internet content such as publishing, where it is now easy for anyone to create, produce, and distribute content with blogs, twitter feeds, YouTube, etc. This has also happened with other digital content and some physical goods that are ordered and distributed via internet models (e.g.; Amazon, Zappos, etc.).

The new industrial revolution, argues Anderson, is in opensource hardware factories. The supply chain has now opened up to the digital and the small. The ability to make and distribute anything massively decentralizes traditional manufacturing and could completely reorganize industrial economies; atoms are the new bits. Matthew Sobol’s holons (communities of local resilience and sustainability) are in the works. Goods can be self-designed or crafted from available digital designs (e.g.; communities like ShapeWays and Ponoko), and then printed locally on the MakerBot or ordered from Alibaba or other global manufacturers. Opensource manufacturing is starting to have an impact on industries like auto design and construction (e.g.; Local Motors), drones (e.g.; DIY Drones), and general hardware design (empowered by the Beagle Board and Arduino).

It is likely that long-tail economics can be applied to many other areas. Medicine is the next obvious example, where health care, health maintenance, drug development, and disease treatment are already starting to shift into n=1 or n=small group tiers of greater customization and ideally, lower cost as more precision is obtained in the measuring and understanding of disease and wellness.

Posted by LaBlogga at 7:36 AM

Labels: design, economics, entrepreneur, hardware, long-tail, long-tail economics, long-tail medicine, micro-manufacturing, opensource, personalization, post-scarcity economy

Sunday, August 01, 2010

The real-time economy

The encephalization of the earth (the coming together of human minds in a variety of higher resolution formats) is picking up speed. More and more people have a pass to join the information highway – Honest Signals points out that ten years ago, less than half the world’s population had made a phone call, but today, 70% of the world’s population has access to a phone for calls and texting.

Numerous collective intelligence tools have been emerging to uplevel and structure the context and quality of human interaction. Wikipedia is the quintessential collective intelligence tool, as are wikis generally, social networks, buzzing and tweeting status updates, collaboration websites, question networks, social purchasing networks, and prediction markets.

The real-time economy

The pulse of activity from collective intelligence tools can be measured in many ways, and is importantly surfacing as a leading indicator for the real-time economy. Prediction markets and social purchasing networks could supplant traditional mechanisms for identifying economic activity and shifts.

For example, there were a number of ways to watch the real-time status of this week’s long-awaited launch of StarCraft II: Wings of Liberty (Blizzard Entertainment’s latest release in one of the highest-grossing video game franchises of all time). The usual tweet stream and buzz feed provided an instant indication of activity. The next highest level was the Blippy feed of real-time purchases. Third, and of greatest salience, was the SimExchange video game prediction market, which provided the highest-resolution aggregation of opinion and was early in calling the sales disappointment during the game's pre-launch and launch (Figures 1-2).

The next obvious step is predicting economic activity even earlier, merging aggregate Facebook likes with social network high-influencer opinions into purchase intent well before sales transactions, in fact, using the encephalization ether for market demand identification and product development.

Posted by LaBlogga at 8:27 AM

Labels: collective intelligence, economics, economy 2.0, prediction markets, real-time economy

Sunday, July 25, 2010

Citizen scientists innovate research model

It is clear that quantified self-tracking, preventive medicine, and community-based research conducted by citizen scientists could be a huge new industry. The coming era of very large data sets and continuously collected information with algorithmic tools for signal-to-noise interpretation could significantly shift how the baseline measures of health are defined and managed. Citizen science is re-innovating the model for conducting science at every level.

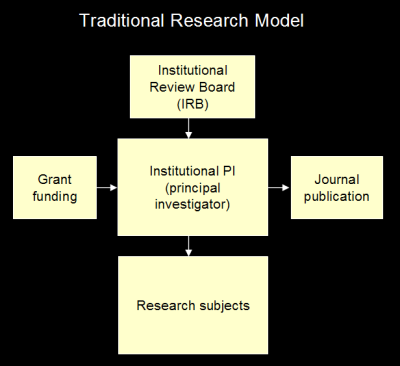

In the traditional research model (Figure 1), there is a principal investigator at an institution, conducting research on subjects, under the purview of an Institutional Review Board, supported by grant funding and publishing results in journals.

The citizen science model (Figure 2) is different because there is no distinction between investigator and subject: everyone is both investigator and participant. The analog to the IRB is therefore different; perhaps there are citizen ethicists, or a list of FAQs. Funding could come from patient advocacy groups, innovative research foundations, social venture capital, and crowd-sourcing, and research results could be self-published on the web.

(source: DIYgenomics - an open platform for citizen science research)

Posted by LaBlogga at 7:48 AM

Labels: big data, citizen science, open science, preventive medicine, quantified self, research, self-tracking

Sunday, July 18, 2010

Blood tests 2.0: finger-stick and microneedle array

The farther future could include smartpatches - non-invasive, invisible, continuously-worn health self-monitoring skin patches. In the nearer future, a killer app for synthetic biology and other new chemistry and biology 2.0 methods could be the ability to create one's own vitamin supplements, and possibly innovate low-cost, non-prescription based finger-stick blood tests, and saliva and urine panels for self-testing.

Barrier to Citizen Science

A significant barrier to the wide-scale adoption of citizen science in the context of health self-management, intervention exploration, and preventive medicine implementation is the high cost, inconvenience and discomfort involved in obtaining traditional lab tests. While many tests may be ordered in a direct-to-consumer fashion through DirectLabs, the Life Extension Foundation, and other websites, it still costs ~$100 per test.

The challenge is to identify the requisite chemistry and processes involved and see if it may be possible to make simple consumer-friendly finger-stick blood test cartridges, similar to the glucose and HDL measurement kits sold at drug stores, which self-experimenters may perform at home or at community biolabs. Continuous monitoring via microneedle arrays would be useful for self-tracking glucose levels and other markers. For example, there could be consumer-targeted versions of the devices being developed by Orsense.

Community labs and high-end home labs may include the (CLIA-waived) Cholestech LDX machine (~$2,000 for the machine + ~$5-10 per measurement cassette) which can assess eight different lipid profiles.
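A rough break-even calculation helps frame the home-lab question. This sketch assumes, as a simplification, that each ~$5-10 cassette measurement substitutes for one ~$100 direct-to-consumer lab test; in practice a cassette and a lab panel may not line up one to one. `breakeven_tests` is a hypothetical helper.

```python
import math


def breakeven_tests(machine_cost, cassette_cost, lab_test_cost):
    """Smallest number of tests at which owning the machine costs no more than
    ordering the same number of direct-to-consumer lab tests.

    Solves machine_cost + n * cassette_cost <= n * lab_test_cost for integer n.
    """
    saving_per_test = lab_test_cost - cassette_cost
    if saving_per_test <= 0:
        return None  # the machine never pays for itself
    return math.ceil(machine_cost / saving_per_test)
```

With the figures above ($2,000 machine, $10 cassette, $100 lab test), `breakeven_tests(2000, 10, 100)` gives 23, since 2000 / (100 - 10) ≈ 22.2; at $5 per cassette the break-even point is 22 tests.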

There is an urgent opportunity to expand the range of finger-stick measurement tests. Below is a Wish List of basic tests for one-off or comprehensive panel delivery.

Traditional blood markers:

- Homocysteine

- Vitamin B-12

- Folate

- Vitamin D

- Creatinine

- eGFR

- Cortisol

- Calcium

- Iron

- Aldosterone

- Estrogen

- Progesterone

- Testosterone

- Estradiol

Posted by LaBlogga at 12:13 PM

Labels: biolabs, blood test, citizen science, diybio, health 2.0, health self-management, microneedle array, quantified self, synbio, synthetic biology

Sunday, July 11, 2010

Ethics of historical revivification

Thought experiment: Assuming a world or worlds without basic resource constraints, if it were technologically possible, would it be more or less humane to revive dead persons from history? Even the recently dead could be out of sync with the current milieu. Obviously, there would need to be rehab programs as contemplated in science fiction, for example,

"Life 101: Introducing Genghis Khan to the iPhone." It is arguable that some large percentage of dead persons would find enjoyment and utility in revivification.

The interpretation of the core rights of the individual could be different in the future. “Life, liberty, and the pursuit of happiness” seems immutable, even when considered in the possible farther future context of many worlds, uploaded mindfiles, and human/AI hybrid intelligences. However, how these principles are applied in practice could seem strange from different historical viewpoints.

Attributes that might be important to an individual now, for example embodiment or corporeality (being physically instantiated in a body), could well be moot in the future. On-demand instantiation could be a norm to complement digital mindfiles.

Should revived historical persons have the option to re-die? Dying and suicide could be conceptually much different in a digital upload culture. Choosing not to run your mindfile could be legal, but deleting it (and all backups) could be the equivalent of suicide, which is generally illegal in contemporary society.

Posted by LaBlogga at 9:06 AM

Labels: corporeality, digital mindfiles, ethics, instantiation, intelligence, Revivification, rights of the individual, uploading

Sunday, July 04, 2010

Automated nourishment

An interesting question arose from discussions at the recent IFTF annual forecast presentation - Would people be more or less healthy in a possible future era of 3-D food-printing on-demand?

Both sides of the case can be seen. The immediate conclusion might be that if anyone could print any food item on-demand any time, people would over-consume and be less healthy.

However, one of the biggest mistakes that can occur when considering a potential future advance is thinking of it in isolation and not bringing forward all other aspects of technology and society to this point too. In an era with on-demand 3-D food printing, other changes are also likely to have occurred. The first and most obvious is that perhaps food content or accompanying supplements, drugs, and nutraceuticals have changed to mean that food that tastes good is not necessarily unhealthy, even when consumed in large quantities. Non-fat non-sugar substitutes may have become seamless matches in the quality of taste but not in caloric consumption. Today’s unhealthy foods could go the way of cigarettes. Automated calorie consumption data collection might finally be possible.

A second argument for why people would be more rather than less healthy in an era of on-demand 3-D food printing is the massively granular physical tracking and biofeedback tools that could exist for each person. Smarthouse and smartclothing data collection through scanning and air, toilet, and unnoticeable blood stick diagnostics could be inputs to a continuously updating personal digital health model (e.g.; the future analog to Entelos's virtual patient or Archimedes's virtual twin). A personal digital health model could be used to assess exactly which real-time nutritional adjustments are needed by an individual. An automated menu plan from the kitchen printer may be preferable for creating meals based on an individual’s known preferences and reactions together with real-time needs. The portable personal digital health model could be permissioned into restaurant settings to similarly allow for customized meal synthesis.

A third argument comes from considering the potential trajectories of social networking and behavior change research. Already peer social networks have a strong influence on individual behavior. One’s whole peer network or interest group could be encouraging healthy behavior via team-based support and/or competition. The advent and widespread adoption of self-tracking and social interaction tools could bring about a new sensibility and personal responsibility for health self-management. Optional financial incentives from insurance companies could further facilitate behavior change.

Conclusion

In any possible future, there could be different technologies and social behavioral norms influencing the process of human nourishment. Food could become healthier over time and unobtrusive self-tracking tools and financial incentives could shift people to automated menu plans. Healthier behavior could be triggered just by making it easy.

Posted by LaBlogga at 8:58 AM

Labels: 3-D printing, automated nourishment, automation, food, forecast, future, health, nanofactories

Sunday, June 27, 2010

Questions uplevel the web

A new internet trend has popped up – asking questions to friends and strangers. Questions are a light-weight means of bringing structure and context to social interaction.

Many people start off with a low level of engagement (e.g.; what is the best pizza place in your neighborhood?). Things can start scaling up (e.g.; how much does it cost for a family to live in London on average?) and become quite detailed (e.g.; can a publisher see the average CPC or eCPM of a particular ad or advertiser on AdSense?). More importantly, more complex thoughts, opinion, philosophy, and value systems are being elicited (e.g.; what is a good real-world example of the "prisoner's dilemma" in recent history?).

The question-asking trend began with Hunch asking users questions to draw a taste profile map for users. The current generation of the concept features sites like Quora and Formspring, where users ask each other questions. Users have profiles and friending/following capability, with their question and answer activity appearing in a status feed. It is like a friend network with topic precision.

Metrics would be interesting, such as the level of semantic complexity of questions, the number of repeated questions (an indicator of what is on the collective mind), and the ratio of asked to answered questions.
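The simpler of these metrics could be computed from a dump of questions and their answer counts, as in the minimal sketch below. It assumes a hypothetical list of `(question_text, answer_count)` pairs and uses crude text normalization to spot repeated questions; measuring semantic complexity would need real natural-language processing and is not attempted here. The sketch reports the share of asked questions that received at least one answer.

```python
from collections import Counter


def question_metrics(questions):
    """questions: list of (text, answer_count) pairs from a hypothetical export."""
    asked = len(questions)
    answered = sum(1 for _, n in questions if n > 0)
    # Crude normalization: lowercase, trim spaces and question marks at the ends,
    # and collapse internal whitespace so trivially different phrasings match.
    normalized = Counter(
        " ".join(text.lower().strip(" ?").split()) for text, _ in questions
    )
    repeated = {q: c for q, c in normalized.items() if c > 1}
    return {
        "asked": asked,
        "answered": answered,
        "answered_ratio": answered / asked if asked else 0.0,
        "repeated": repeated,
    }
```

The `repeated` counts are a rough proxy for "what is on the collective mind"; paraphrased questions would slip past this kind of exact matching.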

Question networks could be the real start of the semantic web, the natural automated approach (akin to Google’s automated machine learning) for creating the next higher level of information density.

Posted by LaBlogga at 7:54 AM

Labels: alternative intelligence, communication, elicitation, information density, interaction, next-generation internet, questions, semantic web, values

Sunday, June 20, 2010

Crisis telediagnosis mobile app demo

Technology-driven tools are evolving so that crisis response can be more participatory and better prepared in advance. A demo of the Triage4G mobile app (Android and web platforms) occurred at the Clear 4G WiMAX Developers Symposium held at Stanford University, June 15, 2010. The Triage4G app features live video-conferencing and real-time matching between first responders/crisis victims and remote physicians for telediagnosis. (Application download)

Posted by LaBlogga at 10:01 AM

Labels: 4G, android, clearinghouse, crisis, emergency response, mobile apps, triage, WiMAX

Sunday, June 13, 2010

Dollar Van Demos

Easily the phreshest idea from New York’s Internet Week, held in Manhattan June 7-14, was Dollar Van Demos! Dollar Van Demos are a showcase of musicians, rappers, and comedians performing inside a dollar van with real passengers. Numerous Brooklyn-based artists are featured in a collection of videos filmed inside dollar vans as they travel along their usual transportation routes.

The site has new videos from Cocoa Sarai, Tah Phrum Duh Bush, Grey Matter, Zuzuka Poderosa, Kid Lucky, Atlas, BIMB Family, I-John, Top Dolla Raz, Hasan Salaam, illSpokinn, Joya Bravo, Bacardiiiii and Jarel soon!

Posted by LaBlogga at 11:41 AM

Labels: artist, brooklyn, conference, crowdsourcing, innovation, local artists, multi-use transportation, music, new york

Sunday, June 06, 2010

Rational growth in consumer genomics

The overall tone of the Consumer Genetics Show, held June 2-4, 2010 in Boston MA, was a pragmatic focus on the issues at hand as compared with the enthusiasm and optimism that had marked the conference’s inaugural event last year. One of the biggest shifts was the new programs that some top-tier health service providers have been developing to include genetic testing and interpretation in their organizations. It also became clear that the few widely-agreed upon success stories for genomics in disease diagnosis and drug response (i.e.; warfarin dosing) have been costly to achieve and will not scale to all diseases and all drugs. Cancer continues to be a key killer app for genomics in diagnosis, treatment, prognosis, cancer tumor sequencing, and risk prediction. Appropriate approaches to multigenic risk assessment for health risk and drug response remain untackled. Greater state and federal regulation seems inevitable. Faster-than-Moore’s-law improvements in sequencing costs continue as Illumina dropped the price of whole human genome sequencing for the retail market from $48,000 to $19,500.

There was generally wide agreement that from a public health perspective, personalized genomics is scientifically valid, clinically useful, and reimbursable in specific situations, but not universally; at this time, better information should be obtained for some people, not more information for all people. The focus should be on medical genetics strongly linked to disease, and on pharmacogenomics in treatment. For example, the FDA requires genomic analysis for some drugs (maraviroc, cetuximab, trastuzumab, and dasatinib) and recommends it for several others (warfarin, rasburicase, carbamazepine, abacavir, azathioprine, and irinotecan). A key point is to integrate the drug test with the guidance for drug dosage.

Even when genomic tests are inexpensive enough to be routine, interpretation may be a bottleneck, as each individual’s situation differs once family history, personal medical history, and environmental and other factors are taken into account. One idea was that the 20,000 pathologists in the US could be a resource for genomic test interpretation; pathologists are already involved, since they must certify genetic test data in CLIA labs. Genomic tests and their interpretation would likely need to be standardized and certified in order to be reimbursed in routine medical care. A challenge is that health service payers are neither interested in nor able to drive genomic test product design.

Key science findings

1. The Regulome and Structural Variation

Michael Snyder presented important research indicating that the regulome, the parts of the genome located around the exome (the 1-2% of the genome that codes for protein), may be critical to understanding disease genesis and biological processes. The complexities of RNA are just beginning to be understood. It is known that controlling gene expression involves more than the simple transcription of DNA to RNA. For example, there is also tight regulation in splicing newly synthesized RNA molecules into their final form and in translating messenger RNA into proteins at the ribosome. Research findings indicate a global/local model of gene regulation, in which master regulators have universal reach and local regulators operate on a range of 200 or so genes.

Snyder also presented updates on his lab’s ongoing research into structural variation in the human genome. A high-resolution sequencing study of the amount of structural variation in humans found ~1,500 structural variations per person that are over 3 kilobases long; the majority are 3-10 kilobases long, with a few extending to 50-100 kilobases (application of this research: Kasowski M, Science, 2010 Apr 9).
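
A minimal Python sketch of how structural variants might be tallied into the size classes mentioned above. The variant lengths are hypothetical illustrative values, not data from the study, and the bin boundaries are an assumption chosen to match the ranges in the text.

```python
from collections import Counter


def size_class(length_bp: int) -> str:
    """Assign a structural variant longer than 3 kb to a size class."""
    if length_bp <= 10_000:
        return "3-10 kb"
    if length_bp <= 50_000:
        return "10-50 kb"
    if length_bp <= 100_000:
        return "50-100 kb"
    return ">100 kb"


def summarize(sv_lengths_bp):
    """Keep variants over 3 kb and count how many fall in each size class."""
    large = [n for n in sv_lengths_bp if n > 3_000]
    return Counter(size_class(n) for n in large), len(large)


# Hypothetical variant lengths in base pairs (illustrative only, not study data).
lengths = [2_500, 3_200, 4_800, 7_100, 9_900, 12_000, 64_000]
counts, total = summarize(lengths)
for label in ("3-10 kb", "10-50 kb", "50-100 kb", ">100 kb"):
    print(f"{label}: {counts[label]} of {total}")
```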

2. Reaching beyond the genome to the diseasome, proteome, and microbiome

Several scientists addressed the ways in which science is quickly reaching beyond the single point mutations and structural variation of the genome to other layers of information. There is a need for the digital quantification of the epigenome, the methylome, the transcriptome, the proteome, the metabolome, and the diseasome/VDJome. For example, the immune system is one of the best monitors of disease state and progression. The strength of an individual’s immune system can be evaluated through the VDJome (the repertoire of recombined V-D-J regions in immune cells, reflecting cumulative immunoglobulin and T-cell receptor antigen exposure).
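
As one illustration of digitally quantifying the VDJome, a repertoire sample can be summarized by counting distinct V-D-J clonotypes and computing their Shannon diversity. This Python sketch swaps in Shannon entropy as a commonly used diversity measure; it is an assumption, not necessarily the metric discussed at the show, and the clonotype labels are hypothetical.

```python
import math
from collections import Counter


def repertoire_summary(clonotypes):
    """Return (richness, Shannon diversity) for a list of clonotype labels.

    Richness is the number of distinct clonotypes; Shannon diversity uses the
    natural log of each clonotype's frequency in the sample.
    """
    counts = Counter(clonotypes)
    total = sum(counts.values())
    shannon = -sum((c / total) * math.log(c / total) for c in counts.values())
    return len(counts), shannon


# Hypothetical sample: each entry is the clonotype a sequenced read belongs to.
sample = ["clone_A"] * 5 + ["clone_B"] * 3 + ["clone_C"] * 2
richness, diversity = repertoire_summary(sample)
print(richness, round(diversity, 3))
```

A repertoire dominated by a few expanded clones scores lower than an even one with the same number of clonotypes, which is why diversity is often reported alongside raw richness.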

There are many areas of interest in proteomics, including protein profiling, protein-protein interactions, and post-translational modification. Michael Weiner of Affomix presented a large-scale digital approach to proteomics. A key focal area is post-translational modification. At least one hundred post-translational modifications have been found, and two are being investigated in particular: phosphorylation (altered signal transduction may indicate tumor formation) and glycosylation (which may indicate tumor progression).

The microbiome (the bacteria living in and on the human body) and host-bacteria interactions are an important area both for understanding human disease and drug response and, for Procter & Gamble, for creating consumer products. The company has basic research and publications underlying products such as the ProX anti-wrinkle skin cream (Hillebrand, Brit Jrl Derm, 2010), as well as work on rhinovirus and gingivitis. The company has a substantial vested interest in understanding the microbiome given its variety of nasal, oral, scalp, respiratory, skin, and GI tract-related products.

Posted by LaBlogga at 8:21 AM

Labels: conference, consumer genetics, diseasome, Genomics, microbiome, personal genome, proteome, regulome, VDJome

Sunday, May 30, 2010

Microbubbles and photoacoustic probes energize cancer researchers

The Canary Foundation’s eighth year of activities was marked with a symposium held at Stanford University May 25-27, 2010. The Canary Foundation focuses on the early detection of cancer, specifically lung, ovarian, pancreatic, and prostate cancer, in a three-step process of blood tests, imaging, and targeted treatment.

The Canary Foundation’s eighth year of activities was marked with a symposium held at Stanford University May 25-27, 2010. The Canary Foundation focuses on the early detection of cancer, specifically lung, ovarian, pancreatic, and prostate cancer, in a three-step process of blood tests, imaging, and targeted treatment.

Imaging advances: microbubbles and photoacoustic probes

Imaging continues to advance. One exciting development is the integration of multiple technologies, for example superimposing molecular information onto traditional CT scans. Contemporary scans may show, for example, that certain genes are over-expressed in the heart, but obscure the specific location of the nodule (tumor). Using probes that bind to integrins in cancerous areas may allow their specific location to be detected (4 mm nodules now, and perhaps 2-3 mm nodules as scanning technologies continue to improve).

Other examples of integrated imaging technologies include microbubbles, which are gas-filled and can be detected with an ultrasound probe when they are triggered to vibrate. Similarly, photoacoustic probes use light to perturb cancerous tissue, and acoustic detectors then pick up the resulting vibrations. Smart probes are being explored that are cleaved by a variety of metalloproteases on the surface of cancer cells; the fragments then enter the cancer cells, where they can be detected with an ultrasound probe.
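
The acoustic detection step relies on timing: in photoacoustic imaging the light pulse reaches an absorber almost instantly, so the sound's arrival time indicates how deep the source is, whereas in conventional pulse-echo ultrasound (as used to detect microbubbles) the sound makes a round trip. A minimal Python sketch, assuming a typical soft-tissue speed of sound of about 1540 m/s; the function names are hypothetical.

```python
# Assumed average speed of sound in soft tissue, in meters per second.
SPEED_OF_SOUND_TISSUE_M_S = 1540.0


def photoacoustic_depth_mm(arrival_time_us: float,
                           c: float = SPEED_OF_SOUND_TISSUE_M_S) -> float:
    """Depth of a photoacoustic source from the acoustic arrival time.

    Light reaches the absorber almost instantly, so sound travels one way.
    """
    seconds = arrival_time_us * 1e-6
    return c * seconds * 1e3  # meters -> millimeters


def pulse_echo_depth_mm(round_trip_time_us: float,
                        c: float = SPEED_OF_SOUND_TISSUE_M_S) -> float:
    """Depth of a reflector in pulse-echo ultrasound: sound goes out and back."""
    seconds = round_trip_time_us * 1e-6
    return c * seconds * 1e3 / 2


print(f"{photoacoustic_depth_mm(10):.1f} mm")  # one-way path
print(f"{pulse_echo_depth_mm(10):.1f} mm")     # half the round-trip path
```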

Systems biology approaches to cancer

As in aging research, some of the most promising progress in cancer research comes from a more systemic understanding of disease and the increasing ability to use tools like gene expression analysis to trace processes across time. One example is the ability to identify and model not just one gene but whole collections of genes that may be differentially expressed in cancers, revealing that whole pathways are disrupted, along with the downstream implications of this.
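
A common way to test whether differentially expressed genes cluster in a pathway is a hypergeometric (one-sided Fisher) enrichment test. The Python sketch below is that generic technique, not a method presented at the symposium; the function name and example counts are hypothetical.

```python
from math import comb


def enrichment_p_value(total_genes: int, pathway_size: int,
                       de_genes: int, overlap: int) -> float:
    """One-sided hypergeometric test: P(at least `overlap` DE genes in the pathway).

    total_genes  -- number of genes measured
    pathway_size -- genes annotated to the pathway
    de_genes     -- genes called differentially expressed
    overlap      -- differentially expressed genes that fall in the pathway
    """
    upper = min(pathway_size, de_genes)
    hits = sum(comb(pathway_size, k) * comb(total_genes - pathway_size, de_genes - k)
               for k in range(overlap, upper + 1))
    return hits / comb(total_genes, de_genes)


# Hypothetical counts: 20,000 genes measured, a 100-gene pathway,
# 500 differentially expressed genes, 12 of which are in the pathway.
p = enrichment_p_value(20_000, 100, 500, 12)
print(f"p = {p:.2e}")
```

Here about 2.5 pathway genes would be expected by chance, so observing 12 yields a small p-value; in practice this test is repeated across many pathways, so a multiple-testing correction is usually applied.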

Cancer causality

Also as in aging research, a 'chicken or the egg' problem arises: multiple things that go wrong are identified, but which happens first, and what causes them, is still unknown. For example, ovarian cancer often involves mutations in the p53 gene as well as gene rearrangements and CNV (copy number variation; different numbers of copies of certain genes), but which occurs first and what causes both is unknown.

Predictive disease modeling

There continues to be a need for models that predict clinical outcome and serve as accurate representations of disease. Integrating DNA and gene expression data with traits and other phenotypic data in global, coherent datasets could make it possible to build probabilistic causal models of disease. It may also be appropriate to shift to physics/accelerator-type models to manage the scale of data now being generated and reviewed in biomedicine.
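
A toy example of a probabilistic causal model: a three-node chain in which genotype influences gene expression, which in turn influences disease risk. This Python sketch marginalizes over expression and applies Bayes' rule; the structure and all probabilities are hypothetical, chosen only to show the mechanics.

```python
# Hypothetical conditional probability tables (illustrative only).
P_HIGH_EXPR = {"risk": 0.7, "non_risk": 0.2}   # P(high expression | genotype)
P_DISEASE = {True: 0.30, False: 0.05}          # P(disease | expression is high?)


def p_disease(genotype: str) -> float:
    """P(disease | genotype), summing over the unobserved expression state."""
    p_high = P_HIGH_EXPR[genotype]
    return p_high * P_DISEASE[True] + (1 - p_high) * P_DISEASE[False]


def p_high_expr_given_disease(genotype: str) -> float:
    """Bayes' rule: P(high expression | disease, genotype)."""
    return P_HIGH_EXPR[genotype] * P_DISEASE[True] / p_disease(genotype)


for g in ("risk", "non_risk"):
    print(g, round(p_disease(g), 3), round(p_high_expr_given_disease(g), 3))
```

Real models of this kind are learned from integrated genotype, expression, and phenotype data and contain many more nodes, but the same two operations, marginalization and conditioning, drive the predictions.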

Posted by LaBlogga at 3:10 PM

Labels: cancer, conference, gene expression, imaging, microbubbles, modeling, pathways, photoacoustic probe, probabilistic models, systems biology